Addressing AI Cooling and Infrastructure Challenges in Indonesia

The era of artificial intelligence (AI) has brought about a revolution, reshaping innovation from drug discovery to global supply chain optimization. However, this surge in computing power comes with significant consequences that extend far beyond the initial capital investment. Data centers across Indonesia, and globally, are scrambling to keep up as legacy infrastructure buckles under AI’s insatiable energy and cooling demands. The industry recognizes this pressing problem. Data center operators in Indonesia, supported by data center cooling distributors such as Climanusa, are now investing in higher-density deployments and innovative cooling technologies.

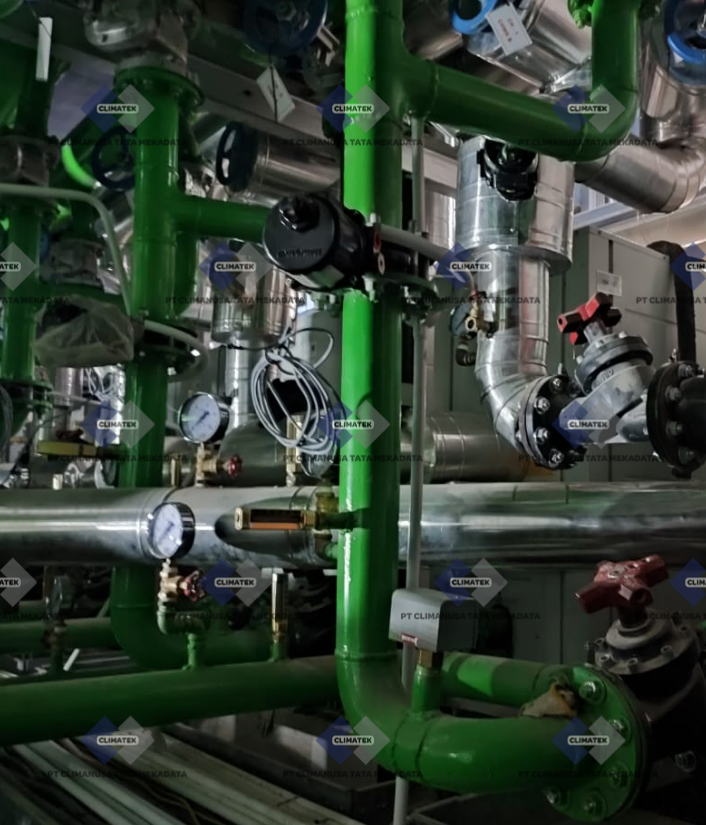

Yet, too often, the focus is narrow: more density, more cooling. This partial approach ignores the critical interplay between cooling, power, network, and structural design, a misstep that threatens to cripple data centers in Indonesia before AI even reaches its full potential.

The brutal reality is that AI racks demand 30-120kW each, yet 78% of data centers still rely on air cooling, which tops out at 15-20kW per rack. High-density deployments need more power, but most IT rooms are stuck with 200-250A power distribution panelboards, far below what is required. More hardware also means heavier cabinets: the industry in Indonesia is looking at 6,000lb+ racks, yet many raised floors and risers are built for half that weight. Operators trying to retrofit facilities with liquid cooling are running into physical constraints: walls that weren’t designed for pipes, hallways too narrow for new systems, raised floors too congested for piping, and no support structure for routing water distribution overhead.

The result? Grid strain. Water scarcity. Months, sometimes years, of construction. Exploding costs. Operational instability. AI is here. The key question is whether data centers in Indonesia, with the support of a forward-looking data center cooling distributor, can evolve fast enough to keep up.

Cooling: The End of Air Cooling As We Know It

Legacy air-cooled systems, built for 5-10kW racks, crumble under AI’s thermal load. Consider modern GPUs: each chip generates 1,000W+ of heat, and a 70kW rack can house over 60 of these chips. Air simply cannot carry heat away fast enough. Liquid cooling, on the other hand, is up to 3,000x more efficient at carrying heat. Direct-to-chip solutions remove heat at the source (CPUs, GPUs, and memory) without recirculating it into the room. Operators in Indonesia who stick with air cooling face three major risks. First, performance throttling: overheating slashes AI training speeds. Second, hardware mortality: repeated thermal cycling degrades GPUs 2-3x faster. Third, energy waste: air-cooled AI racks burn 20-30% more power for the same compute output. This isn’t a future problem; it’s one that every data center cooling distributor in Indonesia must address immediately.
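To put the figures above in perspective, here is a minimal back-of-the-envelope sketch using the standard sensible-heat relation (heat = density x volumetric flow x specific heat x temperature rise). The fluid properties are textbook values near room temperature. The 10 K coolant temperature rise and the helper names are assumptions made for illustration, not figures from any vendor or from the report cited in this article.

```python
# Textbook fluid properties near room temperature.
WATER_CP = 4186.0       # specific heat, J/(kg*K)
WATER_DENSITY = 998.0   # kg/m^3
AIR_CP = 1005.0         # specific heat, J/(kg*K)
AIR_DENSITY = 1.2       # kg/m^3


def rack_heat_w(chips: int, watts_per_chip: float) -> float:
    """Heat produced by the accelerators alone, in watts."""
    return chips * watts_per_chip


def volumetric_flow_m3s(heat_w: float, density: float, cp: float,
                        delta_t_k: float) -> float:
    """Flow needed to carry heat_w away with a delta_t_k temperature rise."""
    return heat_w / (density * cp * delta_t_k)


# Figures from the article: 60 chips at 1,000 W each in a 70 kW rack.
gpu_heat = rack_heat_w(60, 1000.0)
rack_heat = 70_000.0
delta_t = 10.0  # ASSUMPTION: 10 K rise between coolant inlet and outlet

water_flow = volumetric_flow_m3s(rack_heat, WATER_DENSITY, WATER_CP, delta_t)
air_flow = volumetric_flow_m3s(rack_heat, AIR_DENSITY, AIR_CP, delta_t)

print(f"GPU heat alone: {gpu_heat / 1000:.0f} kW of a {rack_heat / 1000:.0f} kW rack")
print(f"Water flow needed: {water_flow * 60_000:.0f} L/min")
print(f"Air flow needed:   {air_flow:.1f} m^3/s")
print(f"Air-to-water volume ratio: {air_flow / water_flow:.0f}x")
```

Under these assumptions, the rack needs roughly 100 L/min of water but close to 6 cubic metres of air per second, a volume ratio of about 3,500 to 1. That is one plausible way to arrive at a figure of the same order as the "3,000x" claim, although the article does not state how that number was derived.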

AI-ready data centers require a holistic design approach. Cooling alone won’t fix the problem. Power constraints, structural limitations, and thermal management all have to be addressed together. This is where Climanusa, as a leading data center cooling distributor in Indonesia, provides integrated solutions and expert consultation so that every aspect of data center infrastructure is considered. The company doesn’t just supply components; it provides the expertise to design and implement robust systems.

Power: The Failing Infrastructure and its Solutions

The grid wasn’t built for AI. Most data centers in Indonesia still operate with 200-250A power panels—a relic of pre-AI workloads. Meanwhile, AI racks are guzzling 50-120kW each. That means operators hit a hard limit: you run out of power before you run out of racks. And the challenge doesn’t stop at the data center door. Municipal grids were not designed for round-the-clock fluctuation of power consumption from AI workloads. Even in power-heavy hubs within Indonesia, utilities are buckling under the strain. If demand response programs don’t scale up fast, expect skyrocketing energy costs, longer deployment delays, and a whole lot more finger-pointing at regulators. The bottom line? If operators aren’t planning for power at scale, they’re planning to fail.

There’s no easy fix. Retrofitting for AI isn’t just about upgrading electrical panels; it’s a total system upheaval. Row-level panelboards need to jump to 400A+ systems. Rewiring and transformer overhauls are non-negotiable. The entire process demands months of planned downtime, millions in CapEx, and a near-complete operational reset. This is where Climanusa, a data center cooling distributor that also works on power solutions, can provide guidance and technology to help make data centers AI-ready. The company’s view is that power and cooling must be designed together, not treated as separate systems.
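The panel limits described above can be made concrete with a rough capacity estimate. The sketch below assumes a 400 V three-phase supply, a power factor of 0.95, and an 80% continuous-load derating. All three values, and the function names, are illustrative assumptions rather than figures from the article, so substitute the numbers from your own electrical design.

```python
import math


def panel_capacity_kw(amps: float,
                      line_voltage: float = 400.0,      # ASSUMPTION: 400 V three-phase
                      power_factor: float = 0.95,       # ASSUMPTION
                      continuous_derate: float = 0.8):  # ASSUMPTION: 80% continuous load
    """Usable three-phase power from a panel: sqrt(3) * V * I * PF * derate."""
    watts = math.sqrt(3) * line_voltage * amps * power_factor * continuous_derate
    return watts / 1000.0


def racks_per_panel(amps: float, rack_kw: float) -> int:
    """Whole racks of rack_kw each that a single panel can feed."""
    return int(panel_capacity_kw(amps) // rack_kw)


# Panel sizes and rack densities quoted in the article.
for amps in (200, 250, 400):
    capacity = panel_capacity_kw(amps)
    counts = {kw: racks_per_panel(amps, kw) for kw in (10, 50, 120)}
    print(f"{amps} A panel: {capacity:.1f} kW usable, racks by density (kW): {counts}")
```

With these assumptions, a 250A panel that comfortably feeds thirteen 10kW racks can feed only one 120kW rack, and a 200A panel cannot feed even one. Moving to 400A raises the ceiling to four 50kW racks but still only one 120kW rack per panel, which is why transformer and distribution upgrades usually accompany the panel change.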

Hardware and Structural Design: Pushing the Limits of Physics

As Huang’s Law continues to prove itself, AI’s hardware demands are rewriting the rules of data center engineering, forcing a reckoning with physics and material science. As GPUs continue their exponential climb in power, heat is becoming the single biggest disruptor—impacting everything from fiber connections to power distribution and even the materials used in racks. And the numbers are staggering. The thermal properties of every cabinet now come into play—how many can function without boiling off the very water used to cool them? If GPU trays keep climbing to 400-500kW, the industry in Indonesia is running headfirst into a thermal wall. As an expert puts it: “At some point, the industry will need to take a page from supercomputers and get nearly every item in the cabinet covered in liquid to operate effectively. Liquid nitrogen or other chemicals even, which is a whole other issue.”

Right now, the science simply isn’t there. But the problem is. The industry is staring down the very real possibility of catastrophic hardware failures, caused not by manufacturing defects but by heat itself. Legacy facilities, built for 6-12kW racks, also crumble under AI’s weight, literally. Most, if not nearly all, data centers in Indonesia currently have raised floors. Once the standard for cooling and cable management, the raised floor has become a nightmare when retrofitting cabinets and racks that cross into the 6,000lb+ range. Similar issues arise when operators try to retrofit walls with the piping needed to cool racks without sacrificing structural integrity. Climanusa, as a full-service data center cooling distributor, focuses not only on cooling but also on the structural implications of new technology deployments, so that the solutions it offers fit the physical and operational constraints of data centers in Indonesia.

Retrofit or New Build: The Crucial Choice

For many operators in Indonesia, retrofitting is the fastest, most feasible solution to scaling for AI. However, it comes with hurdles:

- Electrical Overhauls: Most existing facilities operate on 200-250A panels, far below the power requirements for AI workloads. Upgrades require 400A+ systems, new transformers, and rewiring—a costly and disruptive process.

- Cooling System Upgrades: Traditional air-cooled infrastructure is no match for high-density AI racks. Integrating liquid cooling means modifying existing mechanical systems, which can be complex and space-restrictive.

- Structural Reinforcements: High-performance computing means heavier racks—6,000lbs+ in some cases. Many raised floors and structural supports weren’t designed for this weight, requiring costly reinforcements.
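The three hurdles above can be sketched as a simple gap check. The class names, field names, and the example facility are hypothetical. The thresholds (200-250A panels versus 400A+, air cooling topping out near 20kW per rack, floors built for about half of a 6,000lb rack) are the figures quoted in this article, not engineering limits for any specific site.

```python
from dataclasses import dataclass


@dataclass
class Facility:
    panel_amps: float           # row-level panelboard rating
    cooling_kw_per_rack: float  # heat the cooling system can remove per rack
    floor_rating_lb: float      # load the floor supports per rack position


@dataclass
class TargetRack:
    power_kw: float
    weight_lb: float


def retrofit_gaps(facility: Facility, rack: TargetRack,
                  required_panel_amps: float = 400.0) -> list[str]:
    """Return which of the three retrofit areas fall short for the target rack."""
    gaps = []
    if facility.panel_amps < required_panel_amps:
        gaps.append("electrical")
    if facility.cooling_kw_per_rack < rack.power_kw:
        gaps.append("cooling")
    if facility.floor_rating_lb < rack.weight_lb:
        gaps.append("structural")
    return gaps


# Hypothetical legacy room built from the article's figures.
legacy = Facility(panel_amps=250, cooling_kw_per_rack=20, floor_rating_lb=3000)
ai_rack = TargetRack(power_kw=100, weight_lb=6000)

print(retrofit_gaps(legacy, ai_rack))
```

For this example the check flags all three areas, which matches the article's point that a retrofit rarely involves cooling alone. A real assessment would replace these single-number comparisons with proper electrical, mechanical, and structural surveys.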

Despite these hurdles, retrofitting is often the most cost-effective approach, allowing operators to extend the life of existing facilities while incrementally adopting new technologies. Even so, AI is forcing data centers in Indonesia to choose: retrofit existing facilities or embark on new builds. Both paths come with their own set of challenges and opportunities.

New Builds: Designing for the Future

When budgets allow, AI-native data centers provide unmatched long-term flexibility and efficiency. Purpose-built facilities incorporate scalable power and cooling—engineered to support 100kW+ racks from day one. They also integrate sustainability features—heat reuse, advanced liquid cooling, and reduced operational costs—and offer design flexibility—eliminating the need for retrofitting down the line. But not every operator in Indonesia has the time or capital to take this route. New builds require years to construct and depend on regional power availability—a growing bottleneck in key data center hubs in Indonesia.

The Decision: No One Can Afford to Wait

According to the 2024 State of the Data Center Report, whose findings also apply to the Indonesian context, the AI boom is forcing operators to rethink infrastructure strategies:

- Rack densities are skyrocketing—legacy data centers must adapt now or fall behind.

- Power grids are at capacity—some regions in Indonesia are already struggling to keep up.

- Sustainability pressures are increasing—operators must factor in long-term efficiency and regulatory compliance.

In the face of this rapid change, standing still isn’t an option. The question isn’t whether AI will keep growing; it’s whether the industry in Indonesia can mature fast enough to support it. Data center cooling distributors such as Climanusa are working to provide the solutions this adaptation requires.

The Future of AI-Ready Data Centers

The data center industry in Indonesia is splitting into two paths—and the divide is widening fast. On one side, hyperscalers are racing toward 100kW+ racks, scaling with liquid-cooled infrastructure designed for AI from the ground up. On the other, most businesses are stuck, trying to squeeze more performance out of outdated data centers barely supporting 10kW per rack. This isn’t just a power problem—it’s a design problem. Purpose-built, AI-ready data centers are proving that sustainability and high performance can go hand-in-hand. Some are pairing solar farms with liquid-cooled racks to cut energy costs. Others are capturing waste heat to warm nearby buildings, turning excess energy into revenue. Solutions like Climanusa’s EcoCore COOL CDU are showing that it’s possible to boost rack density fivefold without ripping and replacing entire facilities.

But progress is being held back by secrecy. Instead of collaborating, operators hoard data on cooling performance, power usage, and AI energy efficiency, fearing that sharing insights will give competitors an edge. Without transparency, everyone is reinventing the wheel. And regulators are taking notice. The Indonesian government, like the EU, may soon mandate strict energy efficiency rules for data centers. As an expert puts it: “If we don’t agree on standards like ASHRAE or NEC soon, regulators will do it for us. And no one wants politicians dictating how to cool a server.” This underscores the urgency for data center cooling distributors and the entire data center ecosystem in Indonesia to collaborate and innovate.

Conclusion

AI is unstoppable. Are data centers? Predicting AI’s trajectory is impossible. But one thing is certain: data centers that fail to adapt will become obsolete. Operators in Indonesia can lead the charge now, collaborating on AI-first, liquid-cooled infrastructure, or they can wait until regulators dictate the future for them. The technology exists. The solutions are here, and Climanusa’s EcoCore COOL CDU is one example. The question isn’t whether AI will keep growing. It’s whether the industry will move fast enough to support it. “We can’t even see the horizon,” as one expert puts it. “AI’s full capabilities are still emerging; we’re in the front end of a very dark tunnel.” So, for the future of AI-ready data centers in Indonesia, partner with a leading data center cooling distributor to ensure you are prepared.

Climanusa is your premier choice for innovative and robust data center cooling solutions in the AI era.


–A.M.G–